Is Synthetic Data the Key to AGI?

The data wars are just beginning. Part 2 in a series of essays on AI.

This is part 2 in a series on AI. The first part can be found here.

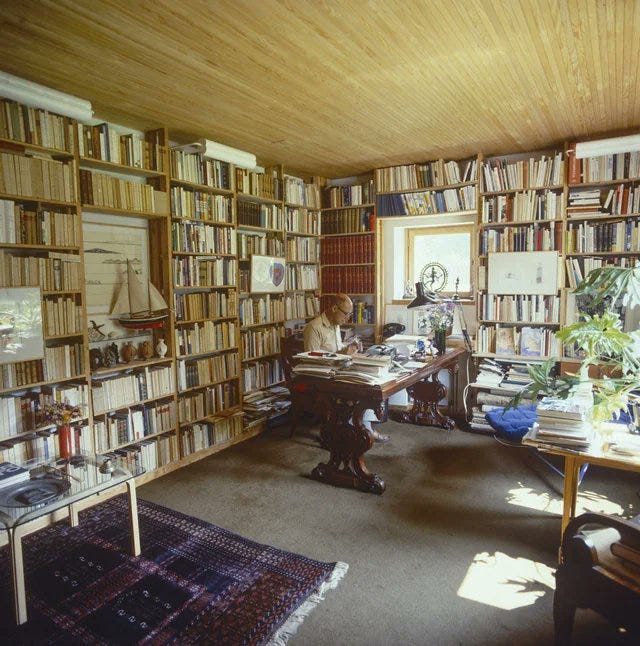

LLMs are trained on vast amounts of data, many libraries’ worth. But what if we run out? Image source: Twitter

Not Enough Data?

One key fact about modern large language models (LLMs) can be summarized as follows: it’s the dataset, stupid. AI model behavior is largely determined by the dataset it’s trained on; other details, such as architecture, are simply a means of delivering computing power to that dataset. Having a clean, high-quality dataset is worth a lot. [1]

The centrality of data is reflected in AI business practice. OpenAI recently announced deals with Axel Springer, Elsevier, the Associated Press, and other publishers and mass media companies for their data; the New York Times (NYT) recently sued OpenAI, demanding that its GPTs, trained on NYT data, be shut down. And Apple is offering $50 million plus for data contracts with publishers. At current margins, models benefit more from additional data than they do from additional size.

The expansion in size of training corpora has been rapid. The first modern LLM was trained on Wikipedia. GPT-3 was trained on 300 billion tokens (typically words, parts of words, or punctuation marks), and GPT-4 on a reported 13 trillion. Self-driving cars are trained on thousands of hours of video footage; GitHub Copilot, for programming, is trained on millions of lines of human code from the website GitHub.

Can this go on forever? A 2022 study published on arXiv suggests that we are “within one order of magnitude of exhausting high-quality data, and this will likely happen between 2023 and 2027.” (High-quality data means a combination of sources such as Wikipedia, the news, code, scientific papers, books, social media conversations, filtered web pages, and user-generated content, such as that from Reddit.)

The study estimates that this stock of high quality data is about 9e12 words in size and is growing at 4 to 5 percent per year. What’s 9e12? For comparison, the complete works of Shakespeare are around 900,000 words (9e5) – so 9e12 means 10 million times the size of the complete works of Shakespeare.

Rough estimates indicate that we may need 5 to 6 orders of magnitude more data – that’s 100,000 to 1,000,000 times more – than what we have to achieve true human-level AI.

Recall that GPT-4 used 13 trillion tokens. There is a good amount of useful data we can extract from yet untapped sources – for example, audio and video recordings, non-English data sources, emails, text messages, tweets, undigitized books, or private enterprise data. From these, we may be able to get another 10 times, or even 100 times, the amount of useful data we currently have, but we’re unlikely to get 100,000 times more.

In short, we don’t have enough.
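The arithmetic in this section can be checked with a short Python sketch. The figures are the ones quoted above (a stock of about 9e12 words growing at 4 to 5 percent per year, roughly 9e5 words in Shakespeare's complete works, and a required increase of 5 to 6 orders of magnitude). The function names are illustrative, and treating growth as steady compounding is a simplifying assumption, not a claim made by the study.

```python
import math

STOCK_WORDS = 9e12        # estimated stock of high-quality data, in words
SHAKESPEARE_WORDS = 9e5   # approximate size of Shakespeare's complete works


def shakespeare_multiples(stock_words: float) -> float:
    """How many times Shakespeare's complete works fit into the stock."""
    return stock_words / SHAKESPEARE_WORDS


def years_to_multiply(factor: float, annual_growth: float) -> float:
    """Years of steady compound growth needed to grow the stock by `factor`."""
    return math.log(factor) / math.log(1.0 + annual_growth)


if __name__ == "__main__":
    print(f"Stock = {shakespeare_multiples(STOCK_WORDS):,.0f} x Shakespeare")
    for factor in (1e5, 1e6):
        for growth in (0.04, 0.05):
            years = years_to_multiply(factor, growth)
            print(f"{factor:,.0f}x at {growth:.0%}/year: about {years:.0f} years")
```

Under these assumptions, organic growth alone would take more than two centuries to deliver even a 100,000-fold increase, which is why untapped sources and synthetic data matter so much to the argument.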

On top of that, existing headwinds may make quality data acquisition even trickier:

User-generated content websites (Reddit, Stack Overflow, Twitter/X) are shutting their pipes and charging expensive licenses for their data.

Writers, artists, and even the New York Times are making copyright claims objecting to their work being used by LLMs.

Some speculate that the internet is filling up with lower-quality LLM-generated content, which could cause the LLMs to ‘drift’ and lower response quality.

Synthetic Data to the Rescue?

The pessimistic conclusion from the preceding analysis would be that we don’t have enough data to train superintelligence. But that would be premature. The key may be in the production of synthetic data – data generated by machines for the purpose of self-training.

It sounds like a myth, but in fact several key modern AIs have been trained using synthetic data:

AlphaZero, the chess-playing AI, was trained on synthetic data. The data was generated by having the AI play against itself, and then learn from its mistakes. (This is synthetic in the sense that it didn’t require looking at real human chess games.)

OpenAI’s recent synthetic video model, Sora, which can generate artificial videos of up to 1 minute with a simple text prompt, was likely trained on synthetic data generated by a video game engine (most likely Unreal Engine 5). In other words, Sora did not just learn about the world through YouTube videos or movies showing real-world settings: the game engine generated artificial landscapes, and Sora learned from those as well.
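To make the self-play idea concrete, here is a minimal Python sketch of generating training data without any human games. It is not AlphaZero's actual method, which pairs a neural network with tree search; as a stand-in, it uses a random policy on a toy version of Nim (players alternate taking 1 to 3 stones, and whoever takes the last stone wins). The game, the record format, and the function names are all assumptions chosen for illustration.

```python
import random
from typing import List, Tuple

# One training record: (stones_before_move, stones_taken, mover_won_game)
Record = Tuple[int, int, bool]


def self_play_game(start_stones: int, rng: random.Random) -> List[Record]:
    """Play one game of toy Nim against itself using a random policy."""
    if start_stones < 1:
        raise ValueError("start_stones must be at least 1")
    stones = start_stones
    player = 0
    moves = []  # (player, stones_before_move, stones_taken)
    while stones > 0:
        take = rng.randint(1, min(3, stones))
        moves.append((player, stones, take))
        stones -= take
        player = 1 - player
    winner = moves[-1][0]  # whoever took the last stone
    # Label every move with whether the player who made it went on to win.
    return [(before, take, who == winner) for who, before, take in moves]


def generate_dataset(n_games: int, start_stones: int = 10, seed: int = 0) -> List[Record]:
    """Collect labelled positions from many self-play games."""
    rng = random.Random(seed)
    records: List[Record] = []
    for _ in range(n_games):
        records.extend(self_play_game(start_stones, rng))
    return records


if __name__ == "__main__":
    data = generate_dataset(n_games=1000)
    print(f"{len(data)} labelled positions from 1000 self-play games")
    print(data[:3])
```

A learner could use records like these to estimate which moves tend to win from each position, play new games with the improved policy, and repeat. That loop, rather than any single batch of games, is what makes self-play a source of fresh training data.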

So, the technique has been proven through chess and through video; the question is whether it will also work for text. Producing high quality video data for training is, in some ways, far easier than generating text for training: anybody with an iPhone can take video that captures reality as it is. But for synthetic text to be useful for training, it has to be high-quality, interesting, and in some sense “true.”

One key point is that creating useful synthetic data does not just involve generating text from scratch. For example, a recent (January 2024) paper shows that LLM rephrasing of existing web-scraped data in a better style improves training and makes it more efficient. In some cases, significant gains in LLM performance can be achieved by simply identifying the worst-quality data in your dataset and removing it (referred to as “dataset pruning”). A paper on image data shows that omitting the least informative 90 percent (!) of the dataset is required to achieve peak model performance.
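Dataset pruning can be sketched in a few lines of Python. This is a minimal illustration, not the method of either paper mentioned above: the `quality_score` heuristic (the share of distinct words in a text) and the `prune_dataset` helper are hypothetical stand-ins, and real pipelines typically score examples with a trained model rather than a word count.

```python
from typing import Callable, List


def quality_score(text: str) -> float:
    """Toy heuristic (an assumption): share of distinct words in the text.

    Repetitive, spam-like text scores low; empty text scores 0.
    """
    words = text.lower().split()
    if not words:
        return 0.0
    return len(set(words)) / len(words)


def prune_dataset(
    examples: List[str],
    drop_fraction: float,
    score: Callable[[str], float] = quality_score,
) -> List[str]:
    """Drop the lowest-scoring `drop_fraction` of examples, keeping original order."""
    if not 0.0 <= drop_fraction < 1.0:
        raise ValueError("drop_fraction must be in [0, 1)")
    n_drop = int(len(examples) * drop_fraction)
    # Indices sorted from worst to best score; the first n_drop are removed.
    ranked = sorted(range(len(examples)), key=lambda i: score(examples[i]))
    dropped = set(ranked[:n_drop])
    return [ex for i, ex in enumerate(examples) if i not in dropped]


if __name__ == "__main__":
    corpus = [
        "buy now buy now buy now buy now",
        "The quick brown fox jumps over the lazy dog.",
        "click here click here click here",
        "Synthetic data may help when human text runs out.",
    ]
    for line in prune_dataset(corpus, drop_fraction=0.5):
        print(line)
```

The scoring function is passed in as a parameter so that the toy heuristic can be swapped for any other measure of quality or informativeness without changing the pruning step itself.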

Other tailwinds will push machine learning research along the scaling curves discussed in the previous post. We now have LLMs that can watch and learn from videos, much as human children do. As we figure out how to obtain higher quality, multimodal data (video, audio, images, and text), we may discover that less data is required than we thought for LLMs to fill in what’s missing from their models of the world.

Implications

Progress will accelerate greatly if we crack this: Considering the number of researchers currently working on synthetic data development, the massive incentive to solve this problem, and the fact that it’s already worked in several other domains, we should expect significant advances in the next few months or years, accelerating the already-high rate of progress. These advances are likely to remain trade secrets.

Internet business models may change, moving away from advertising: Internet companies, previously driven primarily by advertising, may invent new business models focused on the generation of training data. The internet company Reddit, which filed its S-1 to go public last week, already earns about 10% of its revenue (around $60m) from selling data, and this share looks set to increase. Users generate more data on the internet all the time (reviews, tweets, comments…) and access to this fresh data will be valuable. If this is correct, then we should expect companies to launch initiatives to collect even more valuable human-generated data to feed the models.

Antitrust: We should expect closer scrutiny on the antitrust issues raised by exclusive access to expensive sources of data such as Reddit and Elsevier. Large tech companies, with their vast resources and access to enormous datasets, will further entrench their market positions, making it difficult for smaller entities to compete.

Open source may lag behind: Regulators will need to consider how to ensure fair access to datasets, potentially treating them as public utilities or enforcing data sharing under certain conditions. Creating more high-quality pruned and curated datasets will be critical for academia and the open source community to stay competitive, and national governments may make active efforts to curate a central data resource for all LLM developers to use, helping to level the playing field. For now, however, open source developers can only continue to fine-tune their LLMs on the outputs of the superior models produced by the private labs, and thus open source will continue to lag behind the private labs for the foreseeable future.

Data as a public good: Some types of data can be considered a public good and are thus under-invested in. For example, one can imagine the creation of a public dataset that sums up human ethical preferences using comparisons; such a dataset would be a good candidate for a publicly funded or philanthropic AI project. There are many other such examples.

In the science fiction novel Dune, the psychedelic drug melange, commonly called “spice,” is the most valuable commodity in the galaxy. In light of these considerations, it’s no wonder that Elon Musk recently tweeted “data is the spice.” AI labs are heeding his words.

[1] A researcher at OpenAI wrote an excellent blog post, “the ‘it’ in AI models is the dataset”, where he summarizes this lesson pithily: “model behavior is not determined by architecture, hyperparameters, or optimizer choices. It’s determined by your dataset, nothing else. Everything else is a means to an end in efficiently delivering compute to approximating that dataset.”

Nabeel S. Qureshi is a Visiting Scholar at Mercatus. His research focuses on the impacts of AI in the 21st century.

Clear and insightful, thanks a lot.

If synthetic data was the key to AI then the synthesizer would already be a sufficiently advanced AI. There is no such thing as a free lunch and conservation principles rule out any advances from use of synthetic data that would go above and beyond information already available in the initial data set used to train the synthesizer. This follows from basic computational principles. Any irreversible transformation applied to the source data set can only reduce its information content.