Before Congress acts on AI, it should fully understand existing legislation

If we're going to legislate, let's do this thing right.

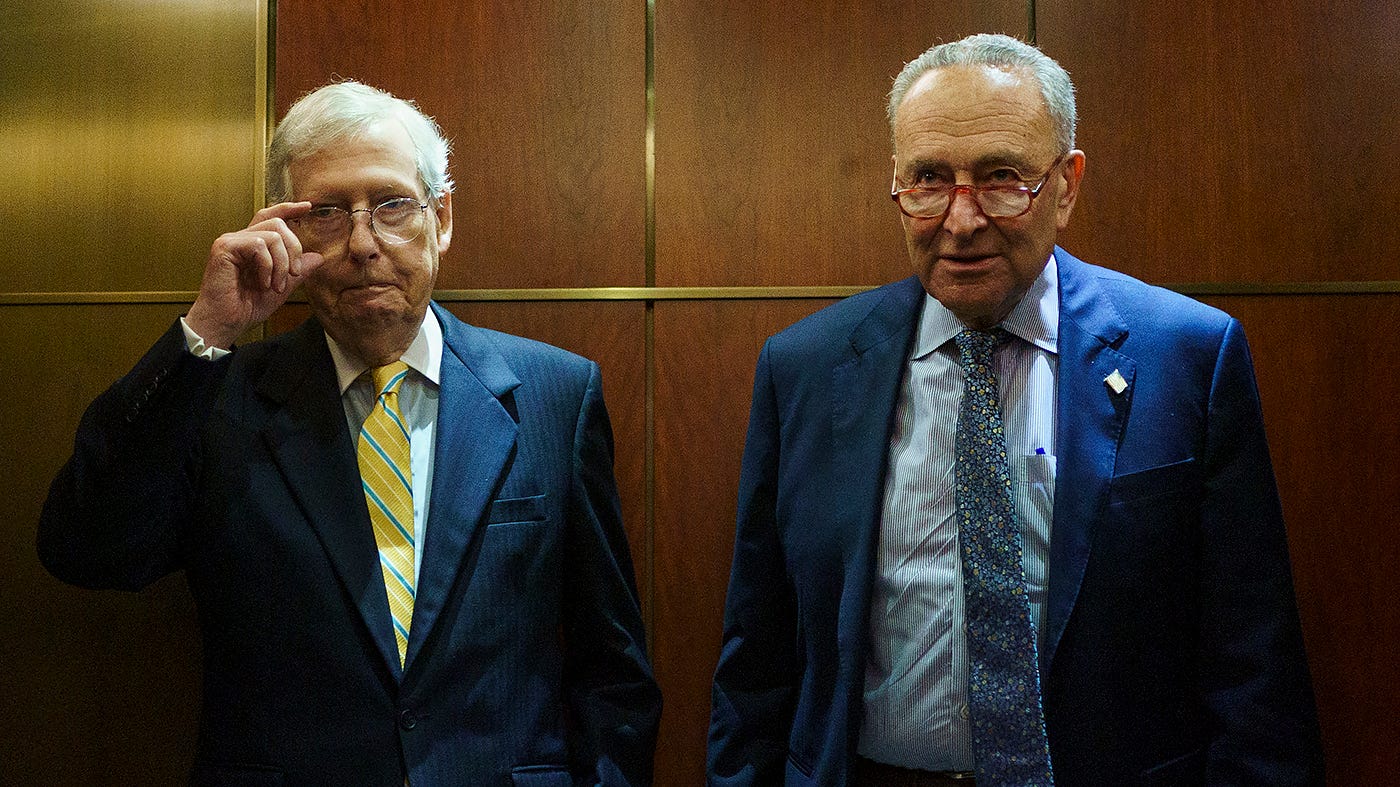

Photo credit Greg Nash and The Hill.

This piece was originally published in The Hill on July 21st, 2023. The original article can be found here.

A note on recent policy developments: The speed of AI policy means things can change in just days and weeks. While the principle of my discussion stands, the Federal Election Commission has now voted to proceed with public comment on AI-generated election advertising regulations. Figuring out what regulations exist will be a somewhat messy process and involve a certain amount of waffling. This should highlight even more the importance of agencies, and by extension Congress, starting the process of mapping regulations so we can approach an ever-clearer picture of what’s on the books. This will not necessarily be simple, and will take time.

Let’s start today.

In June, Sen. Chuck Schumer (D-N.Y.) debuted SAFE Innovation, a framework for potential artificial intelligence (AI) legislation. While other AI legislative proposals shouldn’t be ignored, this effort is backed by bipartisan support and the powerful mandate of Senate leadership.

In this case, senators have identified a diverse grab bag of AI issues they hope to tackle, ranging from algorithmic explainability to racially biased AI decision-making to countering China’s AI competitive posture.

To the Senate’s credit, these priorities reflect many of the high-profile AI challenges faced today. These are big challenges, and new laws may be needed. That said, we must also guard against prematurely granting expansive new regulatory powers and putting the cart before the institutional horse. First, we must pause to analyze the powers our institutions already hold.

Recently, the Federal Trade Commission (FTC) launched an investigation into ChatGPT, illustrating that despite common perceptions, AI is already subject to regulation via a range of preexisting general-purpose statutes. The FTC is not alone. In recent weeks, other agencies, including the Equal Employment Opportunity Commission, the Department of Justice and the Consumer Financial Protection Bureau, have emerged from the woodwork with the realization they too hold AI regulatory powers.

This gradual process of regulatory discovery puts the technology in a novel situation. There is likely a deep body of “AI Law” that already exists, but its exact size, shape and scope are yet to be established. For Congress, this creates a problem. If new legislation conflicts with, contradicts or duplicates existing regulation, the resulting mess may undermine both the safety and innovation it seeks.

It’s worth noting that the opacity of AI legal affairs is a problem that never should have existed. In 2019, the Trump administration issued an executive order requiring a subset of 41 agencies with “regulatory authorities” to review existing regulations and statutes to discover what powers they hold. This was a great idea, but unfortunately, executive commitment did not meet the effort required. Today, 80 percent of agencies have yet to comply and existing AI law remains uncertain. Just as an AI legislative window seemed wide open, executive failure left Congress driving blind.

What is the danger of proceeding without understanding existing AI law?

First, regulatory deadlock. If Congress grants agencies overlapping powers, it invites contradiction. In the 1980s, chocolate manufacturers felt this pain when both the Occupational Safety and Health Administration and the Food and Drug Administration passed conflicting rules governing manufacturing equipment materials. Resolving this chocolaty mess took officials five long years, during which time the industry and regulators were needlessly hampered by undue uncertainty.

For AI, five years is a lifetime. Imagine the economic potential we’d lose, the damaging problems that could go unaddressed and the possibilities, such as lifesaving AI-discovered drugs, we’d delay.

A second risk is the “too many cooks in the kitchen” problem. Without clear regulatory maps, Congress cannot adequately picture the mesh of regulators the industry might face. Proceeding blind risks creating a complicated, confusing, burdensome regulatory web. The result would shift research capital toward finding ways to comply rather than maximizing value for consumers, discourage market participation from all but the well-capitalized tech titans and likely depress the very innovation we need to make this as-yet imperfect technology safe and useful.

For an example of what not to do, look to financial regulation, where a mess of nine-plus competing regulators have spent decades on wasteful court fights, encouraged regulatory arbitrage and engaged in unproductive turf wars.

A final risk is unrecognized gaps. Only by first determining what regulatory bounds exist can we confidently say what is needed. This risk was illustrated last month when the Federal Election Commission (FEC) denied AI regulatory action over concerns the technology fell outside its jurisdiction. To a collection of 50 congresspeople, this came as a surprise — they believed the FEC held that authority. The lesson is that powers Congress thinks exist may not match reality.

These challenges are certainly great, so how can Congress proceed?

Thankfully, SAFE Innovation offers a natural starting point. The framework includes a series of themed “AI insight forums,” Senate-wide events that invite information gathering, collaborative learning and ideation on select issues. At present, nine themes have been announced. I suggest a 10th: the existing regulatory landscape.

During this forum, Congress can invite the heads of the 41 agencies already required to map out their AI rules, in addition to outside legal experts, to interpret the law and report existing powers. This additional effort will certainly pay dividends and provide the guiding map for success.

AI uncertainty is high, and Congress certainly wants to act. That said, before passing new legislation, we must first analyze what already exists. If we don’t, we risk safety and innovation and set society on unstable footing as we step into the AI age.